We have a very conservative network accuracy specification of 5 cm RMSE for our True View 410 “utility” grade 3D Imaging System (lidar and oblique camera combination). When discussing needs with surveyors, I very often hear the magical 1/10 foot requirement (I wish someone could tell me the origins of this specification!). This is 1.2 inches (3.048 cm), so let’s just call it a 3 cm requirement. This is achievable with drone lidar if ground control is used and the data are debiased. However, do we really need this for common applications such as topographic mapping, or do we just want it because surveyors always want the highest accuracy, irrespective of need?

We were recently working with a surveying company who were interested in using drone-based lidar for topographic mapping. A typical project could be a vegetation-covered (e.g. tree-covered) parcel being mapped for land development. The initial data model is used for overall site planning and initial clearing estimates.

I asked how this work is performed if you do not have a drone. The answer is one or more of RTK, digital leveling or total station, depending on what is appropriate for the particular situation. Well, we do not need to ask about the accuracy of these devices; it is generally sub-centimeter relative to the local differential reference (e.g. base station) or monument. However, the big consideration is the nature of the terrain and the density of profiles or points to be collected. Drone lidar is a very dense collection, with an average nominal point spacing of 8 cm or even tighter for a typical True View 410 topo project. This means we are saturating the terrain with an average of about 150 points per square meter.

Now compare this to the sampling that occurs in conventional surveying. If I could collect a point every 10 m, I would have an average sample density of 1 sample per 100 m². Each of these samples would exhibit very high vertical accuracy (say 1 or 2 cm RMSE relative to a reference) but what happens between these samples? If the area is flat as a pancake, you are good to go. However, if you are working in rugged terrain, all bets are off. The terrain can vary dramatically between your samples, severely degrading overall accuracy. Ironically, the rougher the terrain, the denser you need your samples but the more difficult they are to obtain with conventional survey.
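The two densities quoted above follow from simple arithmetic. Here is a rough check. It is only a sketch that assumes samples sit on a regular square grid (real lidar and real survey shots only approximate this), and the function name is purely illustrative:

```python
def density_per_m2(spacing_m: float) -> float:
    """Samples per square meter for points on a square grid with this spacing."""
    return 1.0 / (spacing_m ** 2)


lidar = density_per_m2(0.08)    # 8 cm nominal point spacing
survey = density_per_m2(10.0)   # one conventional survey shot every 10 m

print(f"lidar:  {lidar:.0f} points per m^2")
print(f"survey: {survey:.2f} points per m^2 (1 per {1 / survey:.0f} m^2)")
print(f"ratio:  roughly {lidar / survey:,.0f} to 1")
```

An 8 cm grid works out to a little over 150 points per square meter, which is where the "about 150" figure comes from. Under these assumptions the lidar collection is denser than the 10 m survey by a factor on the order of fifteen thousand.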

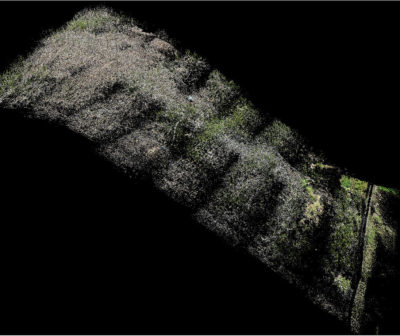

As a simple example, let’s look at a project (Figure 1) we collected of a rugged bit of terrain in the north Alabama Appalachians. The site, the Rock Farm, belongs to David Glenn, our Director of Enterprise Solutions. We flew this site to test mission planning in steep terrain. As you can see in Figure 1, our swath spacing is a bit larger than we would like but density was sufficient everywhere for excellent modeling.

To simulate collecting this project using conventional survey techniques, I performed the following steps:

- Classified ground using our True View Evo automatic ground classification (2 minutes for the entire project)

- Gridded the data into 50 m × 50 m cells

- Randomly sampled one ground point from each cell, moving it to class 23

- Created a Triangulated Irregular Network (TIN) from this sparse class 23 data

- Draped lines over the TIN

- Compared these draped lines to the original ground class

This process will provide us a measure of the degradation of fidelity in the non-observed point sections.
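To make the idea concrete without any particular software, here is a minimal one-dimensional sketch of the same experiment. It is not the True View Evo workflow: the terrain is a made-up synthetic profile, straight-line interpolation between sparse points stands in for the TIN, and every name and parameter is hypothetical.

```python
import math
import random


def terrain(x: float) -> float:
    """Synthetic rugged profile, elevation in meters (made up for illustration)."""
    return 8.0 * math.sin(x / 40.0) + 2.0 * math.sin(x / 7.0) + 0.5 * math.sin(x / 1.5)


def simulate_sparse_survey(length_m=500.0, spacing_m=0.08, cell_m=50.0, seed=0):
    rng = random.Random(seed)

    # Dense "lidar ground" profile: one point every spacing_m along the line.
    n = round(length_m / spacing_m)
    dense = [(i * spacing_m, terrain(i * spacing_m)) for i in range(n)]

    # Bin the dense points into cells and keep one random point per cell
    # (the stand-in for moving one ground point per cell to class 23).
    cells = {}
    for x, z in dense:
        cells.setdefault(int(x // cell_m), []).append((x, z))
    sparse = sorted(rng.choice(points) for points in cells.values())

    # Interpolate linearly between sparse points (1-D analogue of a TIN) and
    # compare with every dense point lying between the first and last sample.
    errors = []
    j = 0
    for x, z in dense:
        if x < sparse[0][0] or x > sparse[-1][0]:
            continue
        while sparse[j + 1][0] < x:
            j += 1
        (x0, z0), (x1, z1) = sparse[j], sparse[j + 1]
        t = (x - x0) / (x1 - x0)
        errors.append((z0 + t * (z1 - z0)) - z)

    rmse = math.sqrt(sum(e * e for e in errors) / len(errors))
    return sparse, rmse, max(abs(e) for e in errors)


sparse, rmse, worst = simulate_sparse_survey()
print(f"{len(sparse)} simulated survey points")
print(f"RMSE between samples: {rmse:.2f} m, worst deviation: {worst:.2f} m")
```

The simulated survey points themselves carry zero error here, so everything the sketch reports comes from what the sparse sampling misses between them. The numbers depend entirely on the invented terrain and should not be read as results for the Rock Farm.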

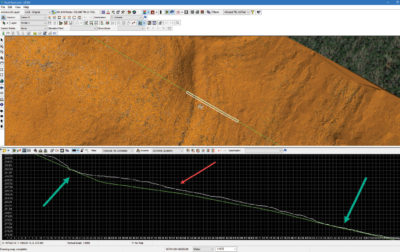

Figure 2 depicts an example of the result of this analysis. The plan view (top) is a TIN of the ground classified points from the lidar data. The profile (lower part of the figure) shows the line draped over the lidar data in white and the profile derived from the simulated survey data in green. The superimposed faint grid has a spacing of 1 m × 1 m. You can clearly see where the “survey” sample points are located (green arrows in Figure 2). Here our simulated survey profile (again, the green line) touches the “true” data (white line). However, as we move away from the survey sampled points, the deviation between the approximation and the true surface becomes fairly dramatic. In the vicinity of the red arrow in Figure 2, the separation is about 2 meters!

Now I know you can make all sorts of arguments about careful profiling along break lines, sampling based on terrain and so forth but you would be missing the point (pun intended) by going down that rabbit hole. For anything other than a true planar surface, you would have to collect survey data at the same density as the lidar data to compare single point collection accuracies. In fact (this is fodder for a different article) you could have an RMSE of 25 cm in the lidar data and, if these errors were unbiased and randomly distributed, still have a much more accurate answer than using traditional survey methods.
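A back-of-the-envelope way to see why noisy but dense data can still win: if the per-point errors are unbiased and independent, averaging N of them shrinks the random error by a factor of the square root of N. The sketch below assumes exactly that, and further assumes that the surface model effectively averages the roughly 150 points falling in a square meter. These are illustrative assumptions, not measurements, and the function names are hypothetical.

```python
import math
import random
import statistics


def standard_error(rmse_cm: float, n_points: int) -> float:
    """Expected error of the mean of n independent, unbiased samples."""
    return rmse_cm / math.sqrt(n_points)


def simulated_patch_error(rmse_cm=25.0, n_points=150, trials=2000, seed=1):
    """Monte Carlo check: RMS of the mean error over many simulated patches."""
    rng = random.Random(seed)
    patch_means = [
        statistics.fmean(rng.gauss(0.0, rmse_cm) for _ in range(n_points))
        for _ in range(trials)
    ]
    return math.sqrt(statistics.fmean(m * m for m in patch_means))


print(f"analytic:  {standard_error(25.0, 150):.2f} cm")
print(f"simulated: {simulated_patch_error():.2f} cm")
```

Under these assumptions, a 25 cm per-point RMSE averages down to roughly 2 cm over a one square meter patch. Real lidar errors are rarely fully independent, so treat this as an upper bound on the benefit rather than a prediction.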

The bottom line here is that a drone lidar mapping approach with lower per sample network accuracy than the survey gear will always give you a better answer than traditional survey so long as you:

- Use checkpoints to debias the lidar data (“Z” bump—a different article)

- Carefully ensure the lidar data are correctly calibrated. This is easy to do with tools in True View Evo (or LP360 for sUAS)

- Do a decent job with the ground classification (again, easy to do with tools such as True View Evo/LP360, TerraScan, etc.)
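The first item, debiasing against checkpoints, amounts to removing the mean vertical offset between the lidar surface and surveyed checkpoints. Here is a minimal sketch of that arithmetic. The elevations are invented for illustration and the function names are hypothetical; this is not the True View Evo implementation.

```python
import math


def rmse(errors):
    """Root mean square of a list of errors."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


def z_bump(lidar_z, checkpoint_z):
    """Mean vertical offset (lidar minus checkpoint) to subtract from the lidar data."""
    diffs = [l - c for l, c in zip(lidar_z, checkpoint_z)]
    return sum(diffs) / len(diffs)


# Hypothetical elevations in meters: surveyed checkpoints and the lidar
# surface elevation at the same locations.
checkpoint_z = [101.20, 98.75, 103.40, 99.10, 100.55]
lidar_z = [101.27, 98.80, 103.49, 99.15, 100.63]

bias = z_bump(lidar_z, checkpoint_z)
before = rmse([l - c for l, c in zip(lidar_z, checkpoint_z)])
after = rmse([(l - bias) - c for l, c in zip(lidar_z, checkpoint_z)])

print(f"bias: {bias * 100:.1f} cm")
print(f"RMSE before: {before * 100:.1f} cm, after: {after * 100:.1f} cm")
```

In this made-up case most of the error is a constant offset, so removing it leaves only the small random component. In practice you would want to report final accuracy against checkpoints that were not used to compute the shift.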

I am always very interested in your thoughts along these lines so please send me an email with your comments and observations.