On 30 January 2021, a draft press release from Bay Area start-up Neural Propulsion Systems (NPS) hit the magazine’s inbox¹. The fledgling company had a product called NPS 500 that combined cameras, lidar, radar, computer hardware and software into a single offering that it claimed would transform the AV market. We were intrigued and made an approach. The result was an interview with, then a presentation from, founder and CEO Dr. Behrooz Rezvani. We’ve put the gist of his presentation below and the interview responses in a sidebar. Also in attendance was Alysson Do, VP operations and administration, NPS. The start-up is based in Pleasanton, California and has an office in Pasadena, California, close to the California Institute of Technology (Caltech). Already it has more than 40 employees, a few of whom are in Europe.

Behrooz started by commenting that a lot of money has been invested in technology to advance the dream of autonomous vehicles. It is still early days, because both the technology and the go-to-market approach have to advance significantly before AVs are commercialized, and further still before there is wide consumer adoption.

Behrooz’s significant insight into this market was that customers will have to believe that AVs are dramatically safer than today’s cars before they will even consider giving over control of a vehicle to an automated system. That means a future of zero accidents. This “zero accidents vision” became the motivation behind all the innovation that NPS has just announced.

Both investors and national and local governments are intensely interested in AV because there is so much at stake and it will transform transportation as we know it.

Two categories of companies are emerging. One makes sensor systems and the other is focused on the perception engine software and navigation, etc. Many problems still persist in the former area and no frontrunner has established itself. That’s where NPS comes into the picture, he explained. As part of his due diligence, he met with a range of potential customers and their enthusiastic responses brooked no other course.

Behrooz emphasized that NPS is focusing on level four/level five autonomy with a sensing system that will enable the company to solve some of the key issues that have held back this nascent industry. The NPS 500 is a very compact system of lidar, radar and cameras with a range of more than 500 m, packaged together and delivered to customers as a complete unit that is ready to be integrated into the vehicle’s computer system.

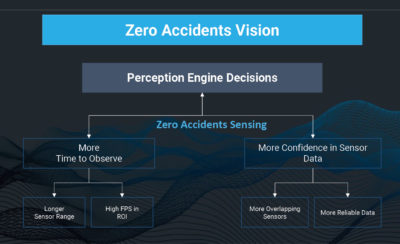

Figure 2: In order to attain the zero-accidents goal, the sensing components provide the perception engine with highly reliable data and more time to do its work.

Behrooz recalled that he had previously started companies that set the bar in their particular fields. For example, Ikanos Communications achieved 100 Mbps over copper when 6 Mbps was the norm. Quantenna Communications did the first 4×4 and 8×8 MIMOs². He has assembled a strong team at NPS (Figure 1), including CTO Babak Hassibi, professor of electrical engineering and computing and mathematical sciences at Caltech, and executive adviser Heinrich Woebcken, formerly CEO of Volkswagen North America and SVP at BMW, to ensure that NPS focuses on what’s really important to automakers.

The company was founded in late 2017 in California, and its work, he emphasized, has involved considerable innovation. To be successful, the company must deliver on this innovation while also developing for the large volume that will be critical to grow at scale.

Behrooz believes that the zero accidents vision should be adopted by the industry as a reset to the negative publicity that has surrounded early AV accidents. Over 18,000 articles were written on Uber’s accident in Arizona and 8,000 on Tesla’s autopilot death³. Serious, negative publicity is also usually followed by lengthy NHTSA⁴ inquiries. Behrooz further pointed out that, while society at large accepts human errors because we’re all human and we all make mistakes, people don’t like to see technology failures. The airline industry is also driven by public opinion issues. Airline safety has increased so dramatically over the past 25 years that few people think about the issue (737 Max aside). For large-scale AV adoption, and to dampen negative publicity and lawsuits, the goal must be less than 100 fatalities per year.

How do we accomplish the vision of zero accidents?

The solution emanates from the two elements he had mentioned earlier, the sensing and the perception engine, the latter being the brain that navigates through the “tough spot” and has to make the right decision. What is the sensing system supposed to do for the perception engine? Logically, the former has to give the latter enough time to interpret the results and navigate properly (Figure 2). It must also provide more confidence in the sensor data. There are two ways to get more time. The system has to deliver data from a longer range and needs to be able to operate at very high frame rates in the congested fields in the short ranges. That’s similar to watching sports on TV: if you want to look at sudden movements, take the frame rate really high—television can show frame-by-frame what happened. Furthermore, all the sensors have to be tightly overlapped. Finally, the data must be so reliable that the perception engine trusts it. This is the zero-accident sensing platform.
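The frame-rate argument can be made concrete with a little arithmetic. The sketch below is only an illustration, not NPS's method: it computes how far a target moves relative to the sensor between two consecutive frames. The function name, the example speeds and the 10 and 30 fps baselines are assumptions chosen for illustration; 100 fps is the figure Behrooz quotes in the interview.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def gap_between_frames_m(speed_mph: float, frame_rate_hz: float) -> float:
    """Distance covered between two consecutive frames at a given relative speed."""
    if frame_rate_hz <= 0:
        raise ValueError("frame_rate_hz must be positive")
    return speed_mph * MPH_TO_MPS / frame_rate_hz


if __name__ == "__main__":
    # Hypothetical relative speeds: a slow urban approach and a fast head-on closing speed.
    for speed_mph in (15, 140):
        # 10 and 30 fps are assumed baselines; 100 fps is the rate quoted by NPS.
        for fps in (10, 30, 100):
            gap = gap_between_frames_m(speed_mph, fps)
            print(f"{speed_mph:>3} mph at {fps:>3} fps: {gap:.3f} m between frames")
```

The relationship is simply inverse: raising the frame rate tenfold shrinks the unobserved gap between frames tenfold, which is why a high frame rate matters most where movements are sudden.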

Figure 5: Side view of NPS 500 mounted on Jaguar i-Pace. The product is also available in black, blue and white.

How does that translate into requirements? Close to 80% of US roads are two-way, i.e. one lane each way with no separator (Figure 3), and the share may be higher in other countries. Many perception engines need seven or more seconds in order to navigate properly. Today, the typical sensor range is 250 m, which provides that much time only when two cars approach each other at about 35 mph each. That’s not what happens on the majority of roads, where the figure is closer to 70-75 mph. Thus NPS estimates that a range of at least 500 m is needed to reach the goal.
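The range requirement follows from simple kinematics, sketched below as an illustration rather than as NPS's own calculation. It assumes two cars approaching head-on at the same constant speed and a perception engine that needs exactly seven seconds; the function names are hypothetical.

```python
MPH_TO_MPS = 0.44704  # exact conversion: 1 mph = 0.44704 m/s


def required_range_m(speed_each_mph: float, reaction_time_s: float = 7.0) -> float:
    """Distance two cars approaching head-on at the same speed close in reaction_time_s."""
    closing_speed_mps = 2.0 * speed_each_mph * MPH_TO_MPS
    return closing_speed_mps * reaction_time_s


def max_speed_each_mph(range_m: float, reaction_time_s: float = 7.0) -> float:
    """Highest per-car speed at which range_m still leaves reaction_time_s before meeting."""
    return range_m / reaction_time_s / 2.0 / MPH_TO_MPS


if __name__ == "__main__":
    for mph in (35, 70, 75):
        print(f"{mph} mph each way needs {required_range_m(mph):.0f} m")
    for rng in (250, 500):
        print(f"{rng} m covers up to {max_speed_each_mph(rng):.1f} mph each way")
```

Under these assumptions, 35 mph each way needs about 219 m, which fits inside a 250 m range, while 70 and 75 mph need about 438 m and 469 m respectively. That is consistent with the estimate that at least 500 m is needed, with some margin left over.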

In an AV study by AAA, cars turning a corner at 15 miles per hour, all with ADAS systems, without exception hit a pedestrian. Thus two things are important from the NPS perspective. One is to be able to detect a pedestrian before reaching a corner (Figure 3). The other is being able to detect cars moving through an intersection. Whether there are signals or stop signs or not is irrelevant, because some drivers may miss or not comply with them. These are key elements of what Behrooz considers a zero-accident platform. The other piece is being highly adaptive and able to scan fast in a congested intersection so that subtle movements are picked up. NPS has concluded that it’s essential to pick up these movements in order to be able to prevent accidents. Finally, while many sensor technologies such as lidar and cameras are line-of-sight systems, there are many situations where line-of-sight is not sufficient. Systems must be able to penetrate through vegetation, for example, to capture what may be missing (Figure 3).

The NPS 500 is designed to be a solution for any car anywhere. It is based on the company’s internal development of light and lidar technology, which it calls solid-state MIMO lidar. It is a super-resolution system with a range of 500 m. Complementary radar technology is integrated into the platform, with a resolution 10 times better than what’s generally available. NPS radar has 360° simultaneous detection and, critically, it is resilient against interference from other radars. NPS claims that, in this respect, it performs 70 times better than other radar technology. Resilience in the presence of other sensors is important as people are going to put more and more of these on the road, which could become a problem.

There are nine cameras on the NPS 500. Behrooz proudly exclaimed, “We have our own silicon at the edge, in aggregate multiple silicons networked tightly together with a processing power of approximately 650 terabits per second. To put that into perspective, that’s roughly twice as much as the supercollider in CERN that is processing particle detection. We’ve also put together the software necessary to detect and see round the corner for the first time. These are the highlights of our sensor technology.”

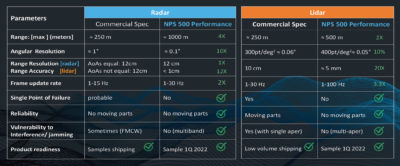

Figure 8: Performance requirements of level 4/level 5 integrated system, with NPS 500 statistics in columns 3 and 5, against typical existing products in columns 2 and 4.

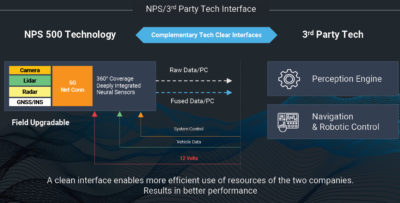

How does it look? It’s a two-piece 360° system installed on the roof (Figures 4 and 5). The outward appearance and dimensions are not yet finalized, but the product will closely resemble these renderings. The system is scheduled to become available in the fourth quarter of 2021. The components are summarized in Figure 6. On the left side of the schema (Figure 7) is the perception engine and navigation control of any third party or any OEM and on the right is the NPS integrated platform with simple connections to the perception engine, system control and vehicle data. NPS provides point-cloud data and fused data, and the unit is powered through a 12 V cable from the battery.

Asked to make comparisons with competitors, Behrooz quickly acknowledged that there are clearly many good lidar companies, such as Luminar and Velodyne Lidar. In terms of range, however, he claimed that NPS out-performs them by a factor of two; in terms of resolution, at least 10% better than the best resolution published. “We believe the kind of lidar technology that we are bringing with AI and silicon, it’s really a class by itself,” he elaborated. “I think you will find that most experts on the electro-mechanical-scanning lidar technology are talking 250 m, and even less on solid state, closer to 200 m. And the best resolution that we’ve seen is 0.1°. We’re obviously better than that. We do a statistical method of computation of point clouds and we have been able to measure up to 0.01°.” He thought that Luminar has claimed around 0.07° by 0.03° and that Ouster has 0.1° × 0.1°. Indeed, 0.1° is typically considered the standard goal. “And, based on our average results, we’re better than 0.05°.” We explored the lidar technology a little further, though Behrooz was reluctant to give too much away. “NPS does not have a moving lidar,” he said. “We execute many small flashes, that collaborate and cooperate. That’s why there’s no dead space between any beams. All currently developed lidars, with the exception of a few that have a limited range, are flash types. They follow a similar concept to the electro-mechanical scanning. Ours is all solid-state.”
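To see what these angular figures mean on the road, angular resolution can be converted into the lateral spacing between neighbouring points at a given range. The sketch below is an illustration under a simple geometric assumption (two adjacent beams separated by the stated angle, hitting a flat target facing the sensor); the function name is hypothetical and the ranges and angles are those quoted above.

```python
import math


def point_spacing_m(range_m: float, resolution_deg: float) -> float:
    """Lateral distance between two adjacent beams at range_m for a given angular step."""
    half_angle_rad = math.radians(resolution_deg) / 2.0
    return 2.0 * range_m * math.tan(half_angle_rad)


if __name__ == "__main__":
    cases = [
        (250.0, 0.1),   # commonly cited range and resolution
        (500.0, 0.1),   # same resolution at the NPS 500 range
        (500.0, 0.05),  # "better than 0.05 degrees" on average
        (500.0, 0.01),  # best figure quoted as measured
    ]
    for range_m, res_deg in cases:
        spacing_cm = 100.0 * point_spacing_m(range_m, res_deg)
        print(f"{res_deg} deg at {range_m:.0f} m: {spacing_cm:.1f} cm between points")
```

Because spacing grows linearly with range, doubling the range to 500 m requires halving the angular step to 0.05° just to keep the roughly 44 cm point spacing that 0.1° gives at 250 m.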

Behrooz also claimed a significant competitive advantage on the radar side over, for example, ZF, Continental or Bosch. The radar is multiband: one of the biggest breakthroughs is that NPS uses four bands scanning simultaneously. This is a huge advantage because the typical frequency used in AV radar today is millimeter-wave at 77 GHz. In order to penetrate material, NPS has to go to much lower bands in order to see through occlusions and the like.

Yet the most apt comparison is against other fully integrated sensor systems that are level four or level five, and these are built by a handful of companies such as Waymo and Argo AI. NPS judges that Waymo is the farthest ahead amongst these integrated sensor systems. Figure 8 gives a comparison. Behrooz anticipates at least two times better reaction time than the current sensors used by Waymo. He expects, based on the mathematical algorithms and test results, to be, “… orders of magnitude better; we believe at least five times better than our nearest competitor.” Waymo, Argo AI and some of their competitors, Behrooz admitted, fully integrate with the perception engine, i.e. the whole sensor system is designed as it should be: the sensors are potentially fully redundant and share full coverage with each other. In that category, however, Behrooz contended that NPS is probably 10 times better than its nearest competitor. He made a comparison with the sensors in Waymo’s fifth-generation (Gen 5) system that came out in April 2020.

NPS does not use the sort of high-resolution, high-definition map database that most AV developers who are making use of lidar and other sensors require. Rather, the NPS 500 provides fused information, such as GNSS position and target classification, but NPS leaves it to the perception engine software to do the map.

Behrooz summarized. NPS, he claims, is the first of its kind as a sensor system company that has integrated cameras, lidar and radar, in a fully redundant way. Its ultra-long range has significant amounts of AI and silicon in it. “Essentially, we set new goals or standards for getting to the vision, which I think we all should shoot for: getting close to zero accidents.”

2 Multiple-input and multiple-output components, for wifi in this case.

3 Behrooz was referring to the 2018 accident in Mountain View, California, but another accident involving a Tesla car, in Houston, Texas, on 17 April 2021, took place while this article was being completed and is generating its own cloud of articles and reports.

4 National Highway Traffic Safety Administration.

After the pitch, the interview … Behrooz Rezvani reveals more about himself

I put some questions to Behrooz that are less product-focused, to try to learn more about him as a person and, from that, discover what sort of company he has created.

LM: Behrooz, you’ve had a remarkable, highly distinguished career, which continues on a steep, upward trajectory. You have undergraduate degrees from Hamline and North Dakota State, an MS from the University of North Dakota and a PhD from Marquette. You have multiple patents and innovation awards. After graduating, you worked in various leading-edge companies and in more recent years you’ve been a founder and C-level executive in several of them. Could you please summarize your life and career, and also indicate the drivers that caused you to make the various changes of direction along the way? Looking back through the years, do you see any relationship between your PhD work and today’s lidar sensors?

BR: Wow—this is a very deep question. To explain why I started NPS, I have to say a few words about how my internal compass works and guides me. I get really excited about solving a new problem that requires a complex solution—but efforts to date have not been all that successful in L4/L5 autonomous driving. The solution must also give back to society and impact our lives. So those are the necessary conditions for me to take on a new challenge.

In a way, everything I have done in my career prior to NPS prepared me to understand one of the most challenging engineering tasks of our time. Autonomous driving/transportation is one of the biggest challenges today and will certainly profoundly impact everyone on the planet.

From a technology perspective, the sensing problem is quite complex, especially when focusing on the zero accidents vision. We are detecting many different kinds of objects with different material composition under adverse weather and road conditions, all while minimizing the potential for error.

When one examines this problem at a deeper level, we have many issues to address. Some of the big ones are: the many aspects of the physics of propagation; EM wave interaction with material provides boundaries where information theory, mutual information and causality are involved; and we need custom chips to do supercomputing at the edge.

At General Electric, my first full-time job in industry, while pursuing my Ph.D., was in the NMR group (nuclear magnetic resonance; now known as MRI—magnetic resonance imaging). I learned a lot about how radio waves interact with the human body.

LM: Please tell us about the founding of NPS. Why did you choose Pleasanton, California?

BR: I wanted to be close to the 680/580 interstate crossing. Those familiar with the San Francisco Bay Area will understand that this location is close to UC Berkeley, Lawrence Livermore Lab and San Jose, giving NPS the ability to attract talent with expertise in optics, chip design and computer vision. However, our first office is near Caltech/JPL in Pasadena.

LM: Can you say anything about your leadership team and how you found the people? You gave some information in your presentation about yourself, the professor from Caltech and the top manager from the automobile industry. As to the other members of your leadership team, it’s interesting how folk in Silicon Valley get together. You’re all highly able, very highly qualified. How do you get a leadership team together? What makes them different?

BR: My goal when we start a company is to make sure we are going to be the best in the world. That’s really the only way to go forward. I remember my first job at GE Medical. I was working on MRI prototypes, and Jack Welch had a saying that, “You’re either number one or number two in your market or I close you down.” Number one is a big, big thing. So then I ask, who are the number ones, you know, the top 0.1% of the class, then I search for people. Some I may not be able to convince, but in that 0.1% a chemistry is developed, and a good leadership team emerges. One of our team members, Amin Kashi, was a vice president of the electric vehicle company NIO. Quite a diverse but complementary team!

LM: I’ve seen a draft of your press release about the NPS 500 and it includes the words, “Neural Propulsion Systems (NPS), a pioneer in autonomous driving sensor solutions, today emerged from stealth to launch NPS 500™, the safest and most reliable autonomous vehicle driving system that enables the industry to reach zero accidents vision.” Wow! Before we talk about the technology, can you please explain the words “emerged from stealth”?

BR: “Stealth” is used a lot in the start-up world of Silicon Valley. There are good reasons. Small companies don’t talk about what they are planning, because they don’t want the attention of direct or indirect competitors. So for that reason we did not have a website until a few weeks ago, to coincide with our product launch.

LM: Now tell us about the NPS 500. From the press release, I understand that it’s a lot more than lidar—it combines lidar sensor(s), radar sensor(s), chips and AI fusion software. Perhaps it also accepts inputs from cameras. Does this mean that you provide a complete solution for the autonomous vehicle (AV) developer? In particular, I’d like to know more about your claim, “World’s first capability to see around corners and full sensor coverage in excess of 500 meters.”

BR: That’s correct. It’s a complete solution and it’s the world’s first all-in-one deeply integrated multi-modal sensor system focused on level 4/5 autonomy. It’s a new sensor-fused system that precisely interconnects the NPS revolutionary solid-state MIMO LiDAR™, super-resolution SWAM™ radar and cameras to cooperatively detect and process 360° high-resolution data, giving vehicles the ability to prevent all accidents. The densely integrated sensor system enables vehicles to see around corners and over 500 meters of range with ultra-resolution accuracy together with highly adaptive frame rate. The NPS 500 breakthrough capabilities make it 10x more reliable than currently announced sensor solutions.

From a super high level:

- Cameras provide high-resolution images, but lack depth information and depend on lighting conditions

- Lidar provides super-precise depth information, but its performance and reliability degrade in adverse weather and light conditions, and it can get occluded fairly easily

- Radar measures velocity with great precision, but has lower resolution than lidar and is vulnerable to interference from other radars.

LM: Can you please tell us about the solid-state MIMO lidar and SWAM radar sensors that are components of NPS 500? Did you develop these? Do you foresee any of your technology working with other suppliers’ lidar and radar sensors in some sort of hybrid solution? Having both lidar and radar avoids the energetic debate about which is better in AV applications—that’s good—but what about cameras?

BR: Our solid-state MIMO lidar is a revolutionary new concept that is composed of many small flash lidars. Working cooperatively together in a precise combination of time and space, they illuminate many fields of view (FoVs) independently. The optical receivers are distributed spatially across the wide aperture of NPS 500 and are precisely timed to sample the FoV of interest, hence the name MIMO lidar. Because of this architecture we can have a very large group of independent laser beams that independently track hundreds of targets at up to 100 fps (frames per second), necessary for the zero accidents vision that the industry seeks.

We also developed super-resolution SWAM radar, which is a new class of radar technology with 10x better detection reliability, a simultaneous, multi-band 360° field of view and 70x better resilience against interference from other radar signals.

LM: Does NPS do its own manufacturing or do you outsource?

BR: We can easily work with Tier 1s and ODMs¹.

LM: Are your customers the AV developers? What is your distribution chain, i.e. do you work directly with your customers in the AV world or do you have distributors?

BR: We are currently working with AV developers and other customers.

LM: May I please ask something that’s of particular interest to the readers of LIDAR Magazine? Do you envisage your solutions being used in any applications other than road vehicles, e.g. UAVs?

BR: Absolutely. We are really about a zero-accidents platform in any transportation. And obviously, our sensing platform can lend itself to many other applications.

LM: Bringing NPS 500 to market is clearly an enormous, daunting technological achievement. You are fully committed to it. Nevertheless, I would like to ask, what do you see happening in the remainder of 2021 and beyond? What other huge innovations is NPS working on?

BR: Obviously NPS 500 is the first product in a family of products we are working on and I hope to discuss them in the near future.

LM: Behrooz Rezvani, thank you very much indeed for taking time to talk to LIDAR Magazine and for explaining Neural Propulsion Systems’ remarkable technology. We wish you well in your endeavors.

1 Original design manufacturers.