On 7 January 2021, Seoul Robotics, a Korean start-up, created a stir when it launched Discovery, a first-of-its-kind “plug and play” product in the lidar space. It consists of both hardware and software, but its heart is SENSR1 (Smart 3D perception Engine by Seoul Robotics), software for tracking vehicles and people. We wanted to know more and put some questions to Seoul Robotics CEO, HanBin Lee (HL), who likes to style himself “Capt’n”.

LM: First of all, tell us about SENSR. It’s software, but what does it do? You describe it as “sensor-agnostic perception software”. Do any of the lidar sensor manufacturers offer software like this for their own sensors?

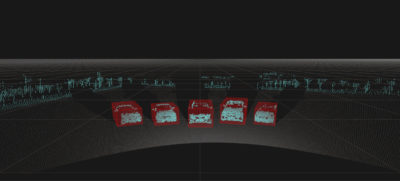

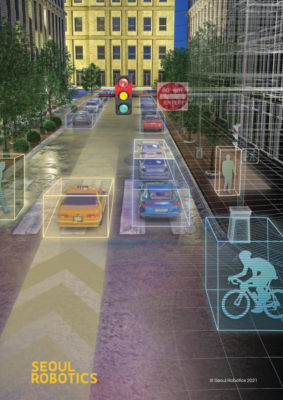

HL: SENSR is really the backbone of Seoul Robotics. It is our proprietary perception software that uses machine learning to analyze and understand 3D data to support a range of functions, from basic tracking and monitoring to autonomous mobility. It’s incredibly accurate even while operating at a very high processing speed. The unique feature of SENSR is that it is sensor-agnostic: it is compatible with more than 70 different types and models of 3D sensors. Any time a new lidar or 3D sensor is released to the market, we train the software for the new sensor. This has been a huge advantage for our partners, as they are able to select the type of sensor that works best for the application they need.

For many years, perception software for lidar sensors was being made in-house at self-driving car companies. It is a massive undertaking to develop and many people outside the AV industry just didn’t have the resources to dedicate to building their own software. What we’re trying to do at Seoul Robotics is bring lidar technology to the masses and show the world how versatile the technology can really be.

LM: We’ll come back to SENSR soon, but next please tell us about Seoul Robotics. What is the company’s history; how big is it; how is it funded; does it have offices in addition to the Seoul headquarters; what other products does it offer?

HL: My co-founders and I met online while taking a course on machine learning from Udacity. We were running a company remotely even before it was the norm! We actually didn’t all meet in person until a year after we founded Seoul Robotics.

Each of us has a background in 3D data processing and we were interested in learning more about developing AI and machine learning systems. In 2017 we teamed up for a competition held by Udacity to develop a software system for self-driving cars. At the time, many people were combining several sensor modalities (camera, radar and lidar), but we challenged ourselves to see what we could do if we relied on data only from lidar sensors. We ended up coming in 10th place out of more than 2,000 teams, which really validated applying machine learning to lidar for mobility.

This type of AI-integrated lidar software was really available only for the self-driving market, but as the price of lidar sensors has continued to drop over the last several years, we saw an opportunity to bring this technology to additional markets. Seoul Robotics has become a bridge between lidar manufacturers and companies and organizations that can benefit from having more insight from 3D data.

It’s been a busy few years! In 2019, we raised $5M, and we’ve been quietly expanding operations and partnerships around the globe. Our team of 30 is growing, and we’ve got offices in Seoul, Silicon Valley, Munich, and Detroit. Currently, we are funded by global financial institutions in South Korea, Hong Kong, and Australia.

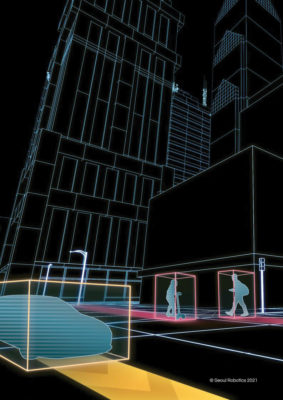

In addition to SENSR, we recently launched Discovery, which is an all-in-one sensor and software system, a first-of-its-kind lidar processing unit (LPU). Our goal with Discovery is to simplify the lidar experience and lower the barrier to entry for companies looking to implement 3D data insights into their systems and processes. It used to take up to two years if you wanted to deploy a lidar-powered application for a specific need. We know this from experience! Now, with Discovery, we can reduce this to just a few minutes. Discovery is truly “plug and play” and quickly turns any lidar sensor into an IoT device. We send everything you need, software and hardware, to quickly set up and begin benefitting from lidar-based solutions1.

LM: What about yourself? Tell us a little bit about yourself and the path you followed in order to take the helm at Seoul Robotics.

HL: I went to Penn State2, where I was briefly exposed to CFD (computational fluid dynamics), which is essentially simulating moving 3D point clouds in Linux. After college, I served in the Korean military for two years as a tank engineer, where I first encountered lidar. When I left the military, I got really into studying machine learning and AI and began taking online classes with Udacity. It was really exciting to be a part of the online community. People kept sharing their breakthroughs, and students were able to try out the latest code right at home.

It wasn’t until Udacity put together a self-driving competition, which allowed me to meld 3D point clouds and machine learning, that I really figured out that we had something special. This combination of technologies just made sense to me. In 2017, the camera software company Mobileye was sold for $15 billion3. When I looked at the lidar space, I realized there was no Mobileye of lidar. So I thought to myself, someone needs to make software for all of these lidar sensors, because lidar is like a camera. It is just a raw imaging sensor, and it is only as good as its software. I knew that 3D computer vision (processing 3D point clouds with machine learning) was the future and would be a must-have ingredient for anything related to autonomous systems. We founded Seoul Robotics and here we are today!

LM: Could you please describe Seoul Robotics’ current customer base?

HL: Our current customer base is extremely varied, which is exactly what we had hoped when we started the company! We’re working with companies across multiple industries as well as with lidar manufacturers on deployments. In the retail space, we’ve currently got contracts with one of the largest retailers in Korea, as well as a partnership with Mercedes-Benz to provide information on the customer journey within its showrooms. We’re also partnering with multiple Departments of Transportation on smart city applications—our latest just went live in Chattanooga, Tennessee—and we are a part of the Qualcomm Smart Campus. Mobility customers will also be an important part of our business. We’re currently working with BMW to automate logistics as well as with the team at the University of Michigan’s Mcity on smart city and autonomous mobility applications.

LM: Your background and that of Seoul Robotics are heavily slanted to autonomous vehicles, but lidar is used in multiple ways. What non-automotive markets are exciting for you? Are you actually involved in robotics?

HL: We do get asked the robotics question a lot! The answer broadly is, yes. At the end of the day, 3D computer vision is the main component of any mobility system. We’re helping robots and machines of all kinds understand and perceive their world. That said, the non-automotive markets are just as exciting for us. Lidar has long been talked about as an AV technology, but the core components of mapping and tracking can be used for so much more. It’s been incredibly exciting to be able to drive the expansion of the technology into new markets.

One of the most interesting use cases for us has been within the retail industry. Lidar has the ability to help stores understand how long customers are queuing, the time it takes for a customer to check out, where customers are spending their time and how they move about the store. Stores haven’t been able to track these types of insights until now.

Seoul Robotics headquarters team in high spirits. They can also be seen on the company’s website, holding some of the lidar sensors that the company’s products support.

LM: The sensor-agnostic feature of SENSR is critical and your website has photos of many of the lidar sensors with which you interface—Hesai, Intel, Ouster, Velodyne Lidar etc. Our readers, of course, are familiar with many of these sensors. Are you continuing to add more sensor suppliers to your list of partners? And please let me ask you a slightly different question. Do you, or do you plan to, interface with the lidar sensors on the iPad Pro and iPhone 12 Pro?

HL: Absolutely. We are continually looking to work with new sensor manufacturers and help bring their sensors to new markets. As I mentioned, anytime a sensor is released, we will train our software to be compatible with the new sensor.

Right now, the lidar on the iPad Pro and iPhone 12 Pro has limited computing resources and you can’t really build new algorithms on top of it at this point. However, it is something we are keeping an eye on and could potentially look into in the future.

LM: Do you foresee Seoul Robotics participating in any way in the airborne survey world, i.e. the processing of lidar data captured from the air for the purpose of generating geographic information, or is this too much of a niche market?

HL: We’ve actually had several conversations with mapping and survey companies around the world looking to automate the processing of geographic information. I’m very interested in this space and there are a few startups that we’re keeping an eye on. This is a very natural industry for Seoul Robotics. Stay tuned!

LM: You have built relationships with several significant high-tech companies—BMW, EmboTech, Mercedes-Benz and Qualcomm. Could you please expand on these relationships? Are there any other important partners that you want to talk about?

HL: While we can’t dive into the specifics of these partnerships, I’m happy to provide an overview of where we are today.

Last December, Seoul Robotics was selected as a Tier 1 software provider for BMW Group. Together with Swiss directional software start-up EmboTech, we’re working to develop a SaaS fleet automation platform that will be used for automated functions for various internal logistics and assembly processes at BMW’s HQ in Munich. This is a fast-moving project and we hope to have more to share here later this year!

Mercedes-Benz is actually a retail partnership. We’ve worked with them to install our Discovery product in their retail showrooms to better understand which vehicles are attracting the most attention from customers. We discovered that nearly 60% of customers spend their time looking at the trunks of vehicles and that it is important to provide more access to all sides of the vehicle on display.

Qualcomm is building a Smart Campus and has selected Seoul Robotics as the software provider for its smart city accelerator program. We feel strongly that cities are going to start seeing the benefits of lidar and 3D data and will want to implement this technology soon. Cities want to provide safer spaces for pedestrians and drivers, and lidar makes that possible. This program will be the blueprint for cities around the world.

We also just announced a partnership with Mando, the global auto parts supplier to car makers such as Hyundai and Kia Motors. Mando will combine SENSR with its smart sensors, and together we’re working to develop all-in-one hardware-software solutions for mobility applications spanning autonomous vehicles, smart cities, smart factories, and unmanned robots.

This year will be very important for our business and we have so many exciting things in our product and partner pipeline. We can’t share these just yet, but be on the lookout! The best place to check in for company updates is LinkedIn4.

LM: One of your partners is the University of Michigan. This is interesting, because we had an article in the magazine about an Ann Arbor firm called May Mobility that uses multiple sensors, including lidar, in autonomous vehicles, which are operational in Detroit5. Can you say more about your links to the University, please?

HL: One of our offices is located in Detroit. This is the center of so much innovation in the mobility space and it made sense to have a presence here. For many years now, we’ve worked with the University of Michigan and its Mcity program on a variety of mobility and smart city applications. Each year, we also run an internship program with the University and Mcity and work with students to build new algorithms for our software and continue to expand its applications.

LM: How are you approaching getting Discovery into the market? Do you have, or plan to have, a distributor network? What sort of pricing model do you use?

HL: We have an incredible team of veterans within the lidar space, whom we will formally announce later this year. Their expertise and relationships have been invaluable to the company as we have grown and deployed our product over the last few years. In addition to this expertise, we also work with distributors around the world and currently have partners in APAC, EMEA, and North America.

LM: I’ve learned from your website that you drive a Tesla but, unlike Mr. Musk, you trust lidar. Would you like to say more?

HL: I drive a Tesla daily. It’s really a fantastic car, but I have gotten a few scratches while using Autopilot. Given that I drive in a very densely populated area of Seoul, the limitations are quite severe.

I truly feel that the future of mobility is going to rely on 3D sensors. Cameras and 2D systems just do not provide the insight that is needed for safe autonomy at scale. Our hope is that anywhere a camera or 2D system has previously been in place, it will eventually be replaced by 3D sensors.

Even Tesla is trying to move from 2D systems to 3D sensors; they want to jump straight into 4D imaging radar, which really is another 3D sensor. There will be no way to get around processing 3D data, and Seoul Robotics will be here when people recognize this and need help. In a sense, I trust in 3D sensors, and right now, lidar is the best one there is.

Lastly, I know Mr. Musk has publicly dismissed the use of lidar, but I think he does understand its importance. Why else would he use it on a recent SpaceX launch? Recently, in a talk on the new audio chat app Clubhouse, he specifically mentioned that he did build lidar for SpaceX. According to him, if he didn’t trust the technology he wouldn’t have done so. So while we might not see it on Teslas, it’s encouraging to hear this from someone so highly regarded in the mobility space.

LM: HanBin, thank you for taking time to answer our many questions. We look forward to learning more about Seoul Robotics’ successes in the future as SENSR and Discovery diffuse into the market-place and the range of applications grows.

1 Both SENSR and Discovery are described in detail on the firm’s website, www.seoulrobotics.org, but the operation of the products is easier to understand through its YouTube channel, https://www.youtube.com/channel/UCcfXs-yXfkN3VfhAwUTooFg.

2 Pennsylvania State University, www.psu.edu.

3 By Intel: see https://lidarmag.com/wp-content/uploads/PDF/LIDARMagazine_Walker-Intel_Vol10No4.pdf.

4 https://www.linkedin.com/company/seoul-robotics/

5 https://lidarmag.com/2018/09/24/autonomous-vehicles-operational-thanks-to-lidar/