Taking advantage of travel to Toronto, Ontario, Canada for a meeting of the Council of the International Society for Photogrammetry and Remote Sensing, managing editor Stewart Walker Ubered to the suburbs, to visit Applanix, the acknowledged pioneer and lead player in GNSS/IMU technology for geospatial purposes. How were they doing? What changes have they made since we last visited in 2008¹? Are they still first-in-industry? Here is what he found.

Editor’s note: A 3.8Mb PDF of this article as it appeared in the magazine—complete with images—is available HERE.

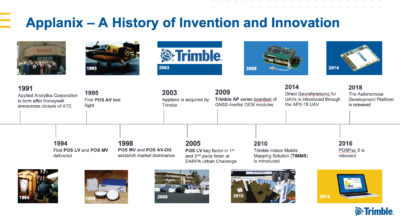

Applanix is housed in a conspicuous, modern building in Richmond Hill, Ontario, Canada, an area of light industry and professional activity amidst Toronto’s northern suburbs. After quick words with sales manager Kevin Perkins, I was welcomed by marketing communications director Andrew Stott and director for airborne products and inertial technology Joe Hutton. Andrew joined Applanix in 2008, whereas Joe was one of the first employees when the firm was founded as Applied Analytics Corporation by Dr. Blake Reid, Dr. Bruno Scherzinger and Eric Lithopolous in 1991. Applanix was acquired by Trimble Navigation in 2003. While its marketing materials reflect this and staff are quick to praise Trimble’s role—as both a supplier of GNSS technology to Applanix and as a market for Applanix’s position and orientation systems—a degree of independence has been maintained, so that the nimbleness, employee loyalty and sense of adventure often found in start-ups and small firms have not been diluted.

The company’s efforts are divided into three main areas: airborne, marine and land. The third includes autonomous vehicle technology and indoor mapping solutions; Applanix produces the Trimble Indoor Mobile Mapping Solution, TIMMS. For these environments Applanix offers a number of specific, customized POS™ products, including:

- POS LV for position and attitude (pitch, roll and heading) measurements for land vehicles. Applications include: mobile data collection, GIS and road surveying.

- POS MV measures the motion of multibeam echo sounders on marine vessels for seafloor mapping applications

- POS AV measures the position and attitude of airborne sensor platforms for aerial survey and mapping applications

- POSPac MMS post-processing software designed for the analysis of POS data

- Trimble AP GNSS-Inertial Board Set plus Inertial Measurement Unit (IMU): Applanix POS products for mobile mapping and positioning are available to original equipment manufacturers (OEMs) as AP Boardsets

- APX-UAV product line: single board GNSS-inertial solutions for direct georeferencing from UAVs

- Autonomy Development Platform: navigation solutions for autonomous vehicles research and development

Applanix continuously evolves, adapting to the needs of its partners to help orient their businesses for success. For nearly 30 years it has offered complete and customized solutions while championing the technology revolution that allows pinpoint positioning in any conditions.

Hutton focused immediately on the rapidly growing UAV-lidar market. LIDAR Magazine was privileged to receive a stream of his thoughts, which we present here, practically unexpurgated!

LIDAR Magazine (LM): Please give your thoughts on where Applanix is today in the airborne market, 28 years after the founding and 16 after the acquisition by Trimble.

Hutton: The hot topic is UAV-lidar. We supply RIEGL with all its mobile, airborne and UAV systems. We supply Teledyne Optech². We have many new partners who are building very high-performance UAV-lidar solutions around our products. They’re frequently using the RIEGL or Velodyne LiDAR scanners, sometimes FARO.

[Figure caption: APX-20 UAV. Applanix has developed a lineup of APX boardsets for professional mapping from a UAV for any kind of application.]

From my perspective, what is really interesting is how quickly the UAV-lidar market is picking up. People are realizing the high quality of the data they can get from the air on these lidar sensors. They’re not doing big areas, just high-accuracy, high-resolution surveying that used to be done with total stations or topographic static scanners—but they’re doing it more efficiently. What’s surprising to me too is that, yes, they are flying FAROs with millimeter resolution, but even the less accurate Velodynes are enjoying huge acceptance and uptake. You may have 5 cm of “snow”, but these sensors are good enough, say, for power-line surveys and you can see through the foliage. We’re seeing all sorts of entrants, new lidars driven by the autonomous vehicle (AV) market—Quanergy, Cepton, DJI and so on. The target is AVs but you can produce interesting geospatial data very cost-effectively. We recognize that even these, on the UAV and at low altitudes, need very good georeferencing. So that continues to be our focus. That’s why we came out with our APX product line four years ago. We saw the UAV market crystallizing and thought it was going to be all about photogrammetry, but now it’s really turned out to be about lidar. You can actually get a point cloud while you’re flying! Andrew and I attended the YellowScan user meeting [the week before this interview was conducted] and saw excellent lidar payloads based on Velodyne and RIEGL, with our product, nicely integrated and almost push-button.

Some competitors are now selling their own lidar solutions. What gives us a competitive edge is that we don’t just sell a GNSS/IMU product, we sell a solution in the sense that we work with the OEM, understand what they’re trying to do, sit with them and hold their hand until that pixel, that point is at the correct location on the ground, exactly meeting the requirements. We can do this because we have the depth and the domain expertise to be able to understand georeferencing. YellowScan is the perfect example: we worked with them, developed the optimum solution, and it’s been very successful. We don’t leave a customer hanging.

LM: Do you know why that is?

Hutton: Historically, when Applanix was first bought by Trimble, we were starting to branch out into solutions, for example MMS. Then we decided that, as part of Trimble, we should return to our core, supplying the georeferencing solutions. TIMMS is an anomaly—the only real complete solution that we sell.

[Figure caption: The Trimble AP60 GNSS-Inertial System is an embedded GNSS-Inertial OEM board set plus Inertial Measurement Unit (IMU) in a compact form factor.]

We don’t even sell the DSS medium-format airborne camera any more, which came from TASC then Emerge. We still have the DSS technology, however, which we now sell through partners. We don’t calibrate cameras and so on, we educate our partners on how to do it, how to integrate solutions. We supply some software, help them generate real-time orthos etc. Our job is to work with all the people trying to do aerial mapping and get our technology in there to help them do that.

We’re not getting stale, because we realize the only way we can keep our clients happy and successful is to bring them all the new technology that comes to market. We invest heavily in R&D. Every IMU we use is custom-built by us or for us. We don’t use anything off the shelf. We work with suppliers to develop and build a custom unit. That enables them to provide the performance we need at the right price points.

LM: How do you customize a MEMS IMU?

[Figure caption: Trimble Indoor Mobile Mapping Solution, TIMMS]

Hutton: At the board level the chips have a MEMS element and an ASIC (Application-Specific Integrated Circuit, an integrated circuit customized for a particular use). There’s a little computer running on there that does certain things to the signals—signal processing and calibration—and that’s the part we get customized. Even at the chip level, we’ll be testing these things. We’re saying, it’s not meeting our requirements, what’s going on here? We get them to correct things for us. We can do this at the chip level because we’re part of Trimble. Applanix is the inertial center of excellence for Trimble, so our mandate is not just to develop products for our P&L but to take our GNSS/IMU technology to the rest of Trimble and to understand its inertial requirements. We’ve done joint developments with the agricultural and construction divisions. The agricultural division is a heavy user of inertial components on autopilots, steering systems and implements, because you need to know relative motion and orientation. They sell a massive number of products per year, which gives us enormous buying power if we can use common components. This is why we are able to build our own IMUs at the board level, because we have economies of scale and enjoy competitive pricing. We go into the MEMS chip manufacturers and work with them to get the MEMS characteristics changed to Trimble’s requirements. Typically, these manufacturers target automotive and industrial applications, but we see interesting characteristics and work with them. Once it gets to a higher performance level no longer suitable for the mass market, we usually find someone to build it for us. We test products from half a dozen to ten different suppliers a year, just to make sure we’re on top of what’s out there. Then we narrow it down. It typically takes about two years to bring a new product to market, to get it customized to the point where it meets our specifications. 
Our customers’ expectation is a fresh product from us that gives better performance at good prices, or meets a performance goal that may not be there today.

That’s on the inertial side. On the GNSS side, of course, we’re part of Trimble. We are immersed heavily in GNSS development efforts, both software and hardware. We’re part of the team that ensures that GNSS software and hardware are always being pushed forward to be the best—and we bring that into our products. What other capabilities do we see our customers needing? Our post-processing software, POSPac, is a big element of how you get the best accuracy for mapping applications. We have a dedicated team working on POSPac full-time. Our products are not just GNSS/IMU, we call it aided inertial or multi-sensor fusion: new technologies for aiding the inertial beyond GNSS. That includes optical and lidar aiding of the navigation solution and what’s driving that is the autonomy space. Our products are heavily used in industrial autonomy solutions, or off-road applications, and we’re continuously seeing where we need to step it up in terms of solutions that are less GNSS-centric. GNSS is great, but when you lose lock or are indoors you need to constrain the position error growth and you can’t just use inertial as it drifts over time. We have developments in SLAM (simultaneous localization and mapping) and visual odometry (VO). All the technology we develop gets offered to our OEM customers, so if a customer has a lidar-based solution, we will say, here’s some capabilities in POSPac to add VO or SLAM input.

LM: You’re on top of it!

Hutton: We have to be. As we see inertial technology develop over time, the MEMS sensors get better. The holy grail is still a cheap, good gyro with very little drift and noise. There’s a big jump right now from MEMS gyros at $10/$100 to those at $1000, and there’s nothing in the middle. You want $1000 performance for $200. It’ll get there. The AV market will push the development: you need a good MEMS gyro to bridge any outages even with map matching. The $10 and $100 gyros are not quite good enough. The $1000 gyros are good enough, closer to FOG performance if you know what you’re doing, but are too expensive. They just haven’t developed something in the middle because they haven’t had a market for it before. But they can if they want. For avionics you can ask for more money, because quantities are lower, accuracy is higher, and certification is a must. Automotive doesn’t need high accuracy for airbags and so on, but autonomous vehicles are different—safety of life is an issue, so they need higher accuracy and eventually certification. But the volumes are enormous. So now there’s a market to justify the development of a higher accuracy gyro at a lower cost. They have the designs for the avionics market, but AV is the market they need as the justification to do the work to bring the cost down. We use the full range from cheap MEMS gyros all the way to expensive FOGs with 500 m windings. Just as we’ve taken the automotive technology and customized it for higher performance, now, if the suppliers attack the AV market, we can take that and enhance it for our applications.

The current breed of automotive MEMS sensors has enabled us to get into the UAV market. You need less orientation accuracy, as the vectors to the ground are shorter, but you need low noise, even if you don’t have the highest accuracy but you have a high-performance, low-noise lidar sensor. That’s where we have focused and why we have different products. The APX-15 UAV is perfect for the Velodyne and similar sensors, because its error budget is within the noise level of the sensor (“in the snow”), whereas the APX-20 UAV can pull the best from the RIEGL VUX sensors. The APX-18 is the same as the APX-15 except that it has two-antenna heading for hovering applications, for blimps, façade scanning and things like that. That’s what differentiates us from our competitors, as well as the fact that we can explain to a customer how to do something, or why what they are doing won’t work!
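Hutton’s point about shorter vectors to the ground can be put in numbers. The following is a minimal sketch, not an Applanix error budget: it assumes simple geometry in which an orientation error displaces a ground point by the range multiplied by the tangent of the error. The function name, the 0.05° error and the two flying heights are hypothetical values chosen only to show the scaling.

```python
import math

def ground_error_m(range_m: float, angle_error_deg: float) -> float:
    """Ground displacement caused by an orientation error at a given range.

    Simple geometry: displacement = range * tan(angle error).
    """
    return range_m * math.tan(math.radians(angle_error_deg))

# Hypothetical orientation error, for illustration only.
ANGLE_ERROR_DEG = 0.05

for label, range_m in [("UAV at 50 m", 50.0), ("manned aircraft at 1000 m", 1000.0)]:
    err_cm = 100.0 * ground_error_m(range_m, ANGLE_ERROR_DEG)
    print(f"{label}: {err_cm:.1f} cm")
```

With the same angular error, the displacement grows in proportion to the range: a few centimeters at 50 m, but most of a meter at 1000 m. That is why a lower-grade IMU can be acceptable at UAV altitudes.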

LM: Is that science finished, i.e. the problem is solved?

Hutton: The science is still developing: just think of the number of satellites in the sky now. It means you can do all kinds of interesting things that you couldn’t in the past! One is the Post-Processed CenterPoint™ RTX service, which is really taking off for aerial mapping. Precise point positioning (PPP) is the overall terminology for that technology, which is basically doing carrier-phase differential GNSS positioning but without base stations. CenterPoint™ RTX is Trimble’s version of PPP and Post-Processed CenterPoint™ RTX is the version embedded in POSPac. The concept of RTX is to have a network of stations around the world continuously monitoring everything that’s in the sky, coming up with precise ephemeris data in real time. So you are not waiting for International GNSS Service (IGS) products. At this point you have corrected everything about the satellites and you have to correct every other part of the chain: calibrate the receivers, calibrate the antennae. All Trimble’s base stations are the same, so there are no errors there, whereas with IGS it’s all different. What error is left? The ambiguity, which is what you’re solving for, plus atmospheric and tropospheric delays, plus receiver noise etc. Trimble has come up with a global model for the delays; that’s the remaining thing to be solved for by the Trimble Kalman-filtering algorithms. If you can transmit these corrections in real time over L-band or Internet, you can start to do centimeter-level positioning without base stations. Now, it takes some time to resolve the ambiguities: it’s like the old days when you had to have float ambiguities and integer ambiguities, so with the standard global model it can take 15-20 minutes to converge to centimeter level. But they’ve just introduced Fast RTX by augmenting these stations so they have networks around the world with stations closer together. This allows local atmospheric models to be developed, including troposphere, and convergence as a result comes down to minutes. They’ve rolled it out in different parts of the world and are using it in automotive applications, for example the General Motors Super Cruise autonomous driving system uses the Trimble RTX service.

So we’ve brought all that wonderful technology into POSPac. Applanix has access to that information and we can get rid of convergence delays since we are post-processing. It’s a subscription, paid six-monthly or annually. You click a button, the software goes out to the web, grabs the corrections and does the forward-reverse processing to get centimeter-level positioning. If you have Fast RTX, which takes minutes to converge, you can use it on UAVs, which have short trajectories. That’s where we are today in terms of the positioning state-of-the-art.
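Why forward-reverse processing removes the convergence delay can be seen in a toy model. The sketch below is not POSPac’s algorithm: it estimates a single constant from noisy measurements rather than a trajectory with ambiguities and atmospheric delays, and all names and numbers are hypothetical. A forward pass has seen only a few epochs at the start of the data set, a reverse pass has seen only a few at the end, and an inverse-variance combination of the two uses every epoch everywhere.

```python
import random

def running_estimates(measurements, noise_var):
    """Recursive estimate of a constant from noisy measurements.

    Returns (estimate, variance) after each epoch; the variance shrinks as
    more epochs are used, which mimics filter convergence.
    """
    out, total = [], 0.0
    for n, z in enumerate(measurements, start=1):
        total += z
        out.append((total / n, noise_var / n))
    return out

def forward_reverse(measurements, noise_var):
    """Combine a forward pass and a time-reversed pass at every epoch."""
    fwd = running_estimates(measurements, noise_var)
    # rev[j] is the estimate built from epochs j..end.
    rev = running_estimates(measurements[::-1], noise_var)[::-1]
    combined = []
    for k, (xf, vf) in enumerate(fwd):
        if k + 1 < len(rev):
            # Use the reverse estimate from epochs k+1..end so that no
            # measurement is counted twice.
            xr, vr = rev[k + 1]
            w = vr / (vf + vr)  # inverse-variance weight on the forward estimate
            combined.append((w * xf + (1.0 - w) * xr, vf * vr / (vf + vr)))
        else:
            combined.append((xf, vf))
    return fwd, combined

# Hypothetical data: a constant of 2.0 observed with noise over 200 epochs.
random.seed(0)
noise_var = 0.04
z = [2.0 + random.gauss(0.0, noise_var ** 0.5) for _ in range(200)]
fwd, combined = forward_reverse(z, noise_var)
print("forward variance at first epoch: ", fwd[0][1])
print("combined variance at first epoch:", combined[0][1])
```

In this toy case the combined variance at the first epoch matches the forward variance at the last epoch, so the start of the data set is as well determined as the end.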

LM: Is there anything about a UAV that can compromise that?

Hutton: Yes, you need to be in a Fast RTX region with local atmospheric models. Mostly that’s in Europe, but it’s growing in the US and Canada. If you’re not in a Fast region, you may not have converged fully by the end of the trajectory. But you’ll still be pretty good. If the trajectory is not long enough, the combined result won’t be as accurate as with a longer trajectory. We recommend 15-20 minutes and you can even start it on the ground before you fly. We emphasize that you are solving for ambiguities and atmospheric delays, so you need clean data with few cycle slips. It’s like the old days of differential GPS. You can do that if you have a good design. Our OEM clients have built these solutions and their data is very clean. It’s very robust. All you need is an Internet connection. Over-the-air real-time RTX is also interesting: it’s the same accuracy as post-processed RTX, but you don’t get the forward-reverse ability to remove convergence. So the beginning of the flight will be less accurate than the end. However, people are starting to want real-time point clouds or they want the result when they land, but it doesn’t need to be the final accuracy, so 10 cm is OK. For example, monitoring a construction site, if you can get a 3D model to compare to the day before and can provide that information quickly, it’s good enough. The other example is rapid response or emergency response, for example landslides. We’re at the point of creating accurate point clouds in real time and people are starting to look at the problems that can solve. How does it affect my decision process? Why do I need to wait? Waiting typically is about accuracy, but you are getting pretty good accuracy in real time.

In photogrammetry you can’t do traditional aerial triangulation in real time. We had to re-educate people in the UAV segment on direct georeferencing: everyone bought a camera and push-button UAV photogrammetry software and said they could map! We asked them about GCPs, processing times, accuracy. We had to explain direct georeferencing. We discovered that these UAV-photogrammetry software packages didn’t import the exterior orientation (EO) georeferencing information correctly—they ignored the angles or de-weighted them so they were effectively ignored. You couldn’t get a solution without point matching, yet you already had the accurate angles—that’s frustrating. This is one of the reasons why we bundle our UAV products with Trimble UASMaster: it’s a powerful UAV-photogrammetry software solution that properly implements direct georeferencing support! With a lidar sensor and a calibrated camera, you can create colorized point clouds and orthos without any triangulation process. If it’s not a metric camera, or if you have mm GSD and cm GNSS accuracy, maybe triangulation will reduce the noise a bit, but typically people put only GNSS on and they solve every single time for camera and lens calibration and everything else, which constrains it in the air, and maybe constrains the average on the ground, yet it’s a big Jello! You need to know what you’re doing to get consistent accuracy. We train our photogrammetry customers: calibrate the camera, hold everything fixed, especially lens distortion, and if necessary just do a relative adjustment to refine any errors in EO, which is the traditional approach. It’s very efficient, you don’t need a lot of endlap and sidelap, and if you can’t match points it doesn’t matter, you still have EO! We’ve almost taken a step backward, but lidar is making us take a step forward. Lidar people ask, if I can create a point cloud to that level of accuracy without any adjustment, why can’t I do the same with a camera? 
Well, you can, but you have to have the right kind of camera and software. However, I think the way lidar’s going to go is automatic SLAM, another way of improving things. The ultimate solution is integrated sensor orientation for lidar and photogrammetry, where you use the best of everything and spit out the solution! We’re close and it’s a software matter. We’re working on that type of technology for AVs and we’ll bring it into the mapping segment as well. AVs are another real-time requirement, but post-processing can refine everything. We’re releasing a new lidar QC module for POSPac³, for example taking SLAM technology from the land side into the UAV segment: it uses SLAM, creates voxels from the lidar, does least-squares adjustment for boresight and, as an option, adjusts the trajectory and gets an adjusted SBET (smoothed best estimate of trajectory). The primary reason is to boresight the lidar automatically, but, also, if you’re having problems with the trajectory, you can use the lidar to fix it and use that same trajectory with the camera. Lidar point cloud adjustment is much, much faster than aerial triangulation.
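The idea behind direct georeferencing, as Hutton describes it, is that measured exterior orientation lets each image point be placed on the ground without point matching. A minimal sketch follows, under simplifying assumptions that are ours and not Applanix’s: a calibrated frame camera looking along its negative z axis, flat ground at a known height, a local East-North-Up frame, and a rotation about the vertical axis standing in for the full attitude matrix. All function names are hypothetical.

```python
import math

def mat_vec(R, v):
    """Multiply a 3x3 matrix (list of rows) by a 3-vector."""
    return [sum(R[i][j] * v[j] for j in range(3)) for i in range(3)]

def rot_z(kappa_rad):
    """Rotation about the vertical axis; a stand-in for a full attitude matrix."""
    c, s = math.cos(kappa_rad), math.sin(kappa_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def image_to_ground(camera_pos, R, x_mm, y_mm, focal_mm, ground_z):
    """Intersect the ray through image point (x, y) with a flat ground plane.

    camera_pos: perspective centre (East, North, Up) in metres.
    R: camera-to-mapping rotation derived from the measured attitude.
    """
    ray = mat_vec(R, [x_mm, y_mm, -focal_mm])  # camera looks along -z
    if ray[2] >= 0.0:
        raise ValueError("ray does not point towards the ground")
    scale = (ground_z - camera_pos[2]) / ray[2]
    return [camera_pos[i] + scale * ray[i] for i in range(3)]

# Hypothetical exposure: 100 m above flat ground, 50 mm lens,
# image point 10 mm from the principal point along x.
cam = [1000.0, 2000.0, 100.0]
print(image_to_ground(cam, rot_z(0.0), 10.0, 0.0, 50.0, 0.0))
print(image_to_ground(cam, rot_z(math.pi / 2), 10.0, 0.0, 50.0, 0.0))
```

Changing only the rotation moves the same image point from 20 m east of the camera to 20 m north of it, which shows why software that ignores or de-weights the measured angles cannot place points without matching them.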

LM: What about Trimble? The acquisition occurred some years ago, so there has been time to assess the benefits. Typically, acquisition gives the acquired company deeper pockets for development, but reduces innovation and nimbleness. On the other hand, it gives access by the acquired company to internal markets (other Trimble business areas), as well as a strong, global distribution network. To what extent is this true?

Hutton: That is all very true. Applanix is truly proud to be a Trimble company, since 2003. Applanix draws on Trimble’s global network of experts and deep industry knowledge, while Trimble’s related geospatial and autonomous technologies are complemented by Applanix’s leading positioning, orientation, localization and perception systems. Together, customers have access to the most advanced solutions to collect, manage and analyze complex information faster and easier, making them more productive, efficient and profitable.

Applanix’s industry leadership has always been predicated on our ability to innovate solutions for the evolving challenges of our partners. Trimble fully supports this. Our R&D teams are constantly pushing the limits of accuracy that can be obtained through almost any sensor in ever more challenging environments and developing software solutions that are practical, user-friendly but powerful enough to provide fast, efficient results. That’s how we stay fresh—we strive through the people and a deep understanding of the business and the technologies—and R&D. Let me support my arguments by citing the words of three customers:

“Applanix is a core market leader. Their AP boards are the main sensors used with the [SABRE-SCAN™] system, [and they] always deliver the same thing: highly accurate, dependable, repeatable results. Applanix has been very supportive: they’re quick to respond to support our needs through the development process, are reactive to suggestions, and are always evolving their technology to become more compact, lightweight, and easy to use.”

— Paul Edge, CEO, SABRE Advanced 3D Surveying Systems

“Our team has been very happy with Applanix’s products and support for the past nine years. The hardware, documentation and customer support are excellent. Applanix provides a well-designed, comprehensive solution.”

— David Hardy, geologist, Geological Survey Ireland

“Norsk Elektro Optikk has a very good relationship with Applanix. Both the sales team and the development team have been very helpful in our integration work. The development team has also included some features that we needed in the INS solution, and we are very grateful for that.”

— Trond Løke, Norsk Elektro Optikk.

LM: What happened to Applanix’s original principals?

Hutton: Blake Reid was a founder and president of Applanix before the Trimble purchase, and he stuck around for a while, went part-time, then retired around 2011. Dr. Bruno Scherzinger was also a founder and is currently our CTO. Bruno is also a Fellow in Trimble and takes a broader role. Our other founder Eric Lithopolous, who was instrumental in getting direct georeferencing accepted by the photogrammetric community, unfortunately passed away in 2014.

LM: We wanted to ask you, without going into deep mathematics, to show how Applanix’s approach, honed through decades of research by talented scientists and engineers, gives it an advantage. We haven’t done a deeply technical answer, but we have covered this.

Hutton: Yes, we’ve spoken about inertial, GNSS and our approach. The key point is that we like to play with things and try to help our customers. We understand the value to our customers and work with them to make them successful. For example, we’re bringing the lidar QC tools to market because there’s a definitive need. With the growth of lidar and integration, some tools are missing, such as easy-to-use boresighting. We did this with photogrammetry some years ago. We’re doing the same thing with lidar. The SBET adjustment is similar to photogrammetry.

LM: There are now ASPRS or USGS standards for lidar QC, for example differences between strips.

Hutton: We focus on the best possible georeferencing. Our job is to solve for the boresighting, then, if we can use some of the lidar data to improve the trajectory, we will. Next come the point clouds. Our output is not point clouds, it is boresights and, if you want, adjusted trajectory—the georeferencing! We stop there, because then you’re into the mapping part.

LM: What are your plans for the future in terms of markets? You’ve said that UAV is huge.

Hutton: It is, and so are AVs. We’ve developed the Autonomy Development Platform and are working on SLAM and VO capabilities for AVs as well. All the technology we’ve developed for UAV-lidar QC is applicable to AVs. Louis Nastro is heading up the effort and there’s an article in Autonomous Vehicle International⁴.

LM: How much of that is relevant for manned aircraft?

Hutton: With manned, it’s not so critical, because the solutions are pretty much done. A lot of smart people figured this out a long time ago. You’re open sky: you have perfect GNSS for positioning and FOG IMUs for orientation, so the lidar point clouds are excellent. You don’t want to do adjustment on these massive point clouds, but with UAVs the point clouds are smaller, and you might be flying between buildings or under a bridge, yet you want to map it. There is also 360° lidar. That’s where these tools are relevant. The same tools drive an industrial robot, inside or outside, automatically. We’re taking technology from AVs and putting it into UAV-lidar. At some point, the same technology can be used to fly the UAVs if there’s a market for that. In the future—judging by the direction UAVs are going—you’ll say, here’s my bridge, draw a little area on the computer, then the UAV will fly automatically, land, and there’s your map.

LM: With advances like deep learning and artificial intelligence (AI) it will go further, for example identifying a bolt on a bridge as too rusty.

Hutton: Based on the sensor configuration—lidar and camera—it will know where that bridge is, how to fly it, it will go up, it will do collision avoidance, use SLAM, and, when it lands, there may be a brief pause, a push to the Cloud, and then you’ll have the map. It will happen minutes later. Once you have the data, the accurate 3D model, the AI will do the inspection.

LM: Or compare the model with last year’s? And then a human has to make the difficult decision whether to fix something or wait till it falls down!

Hutton: So you can fly with lidar and cameras, but there’s still a manual aspect of flying. You’ll be able to say, “I have a bridge at this location—go survey it for me”. The technology is actually there. We’re getting closer and closer. The same technology that’s available for AVs today, industrial, on-road and off-road, is going into the UAVs, they’ll work out how to find the bridge and survey it, given the approximate location. It has incredible eyes—cameras—and SLAM in real time, so it can work everything out after, perhaps, an initial pass.

LM: You’ll still have a point cloud with millions of points?

Hutton: You’ll be pushing it to the Cloud, where all that filtering and so on will be going on. You’ll have a wonderful model for AI to work on. You have to think that AI will work as long as the data is collected consistently. We are working in agriculture as well, where temporal analysis is very important. Having millimeter resolution data enables you to make very smart decisions about fertilizing, watering, harvesting or getting rid of waste. The enabler is radiometry with accurate georeferencing. We work with a group at Purdue University and there are some high-value crops which you can assess by area, not even by height. You can tell based on the area, even from an ortho, and spectral characteristics, when to harvest. And we have customers talking about high-value crops such as strawberries, where you even have to look under the leaves. But whatever you do, you have to be sure you are looking at the same plant (in a temporal comparison). There are high-value crops where the waste in the harvest is up to 30%: if you can reduce this to 10%, it’s worth millions of dollars. A crew will harvest everything regardless of whether it’s ready, so the better you know whether it’s ready, the better you can manage your business. It’s enabled by accurate geolocation and high-resolution imagery that you can get only from a UAV. You’re getting it from photogrammetry with a multispectral or NIR camera; for most of the applications all you need is an ortho. But we still need that centimeter level and we have to do it fast. We can’t be running GCPs and triangulation. You need to calibrate the camera and it has to be stable. We show them that, if you calibrate the camera, we get 2-3 cm absolute accuracy on the orthos day after day. So you can start thinking about how you can scale this.

LM: What about indoor? Is there more to do there?

Hutton: Always! We have TIMMS, where the challenge is that the number of applications is just enormous, from a 2D floor plan all the way up to a 3D model for security, situational awareness, emergency response, but that market is still conservative as well—“I can live with a 2D floor plan”—because they don’t understand the value of better information. There are different aspects, from design to construction to life-cycle management. Building-management companies can pass on the survey costs to tenants! But they have to change the process, so at this point there’s no incentive. That’s commercial real estate. We did a lot of work on mapping buildings for first-responders, who relish situational awareness. The challenge is paying for it! Governments would have to mandate it and make the funds available. They don’t need to establish the value—it’s huge. You can save lives. You would have to legislate, for example to have it for new buildings.

LM: Joe, thank you very much indeed for these candid, informative insights into your company, its technologies and markets, and the trends it exemplifies.

The Applanix facility

Joe took me on a whistle-stop tour of the plant, a buzzing hive of activity and technology. Finally, he rolled up a door through to the adjoining warehouse, which Applanix has taken over. Most of it was a games area for employees, including a ping pong table, a basketball court and a tennis court! Joe explained that Applanix competes with the high-tech titans for the very best graduates in subjects such as electronics, electrical engineering and computer science, so it must offer top-drawer working conditions.

This area opened off the main facility, where we had seen R&D and testing areas, with lots of APX products. There are three inertial chips on an APX-15. The GNSS receiver is on the other side of the board. The APX-18 is 50% bigger. The APX-20 has two boards and an external IMU. They sell thousands of units per year, including sometimes hundreds to a single customer. This is a striking contrast to the high-end airborne and land systems, where annual sales would be in the hundreds. We saw the component design area and Joe explained that, when the design is final, Trimble can manufacture it in the required numbers, including offshore manufacture.

Applanix was coy about numbers, but Joe ventured that his part of Trimble has around 150 employees, of whom 120 are based in Richmond Hill. I said that this suggested annual sales of perhaps $45m, but Joe would not be drawn. He did add, however, that Kevin Perkins had exceeded $100m in accumulated sales over his career with Applanix.

Applanix tests numerous models of IMU, so that they understand the performance and potential of new units that come on the market. Joe showed me two vehicles in the facility, for testing GNSS/IMU systems and exploring AV technology. One was a medium-size sedan and the other, a van, the back of which was full of IMUs, one of which was the gold-standard, export-controlled Honeywell unit that Applanix uses to test all the others.

Endnote

Applanix has prospered, therefore, since the acquisition by Trimble, which provides it with GNSS components and services, as well as powerful channels for manufacturing, marketing and sales. Yet Applanix has retained certain elements more characteristic of a small company or start-up—top-quality, talented and committed staff; healthy respect for R&D; progress through deep understanding of the technology, markets and customers’ needs; and willingness to turn trends in markets and technologies to best advantage. R&D plus continuous testing of components enables it to stay fresh—nothing is stale in the APX world! The company is riding the transformation from selling limited numbers of top-performance systems for manned aircraft and MMS applications to selling large quantities that give appropriate performance at realistic price points into newer markets, especially UAV-lidar and AVs. It plans to remain the world leader in GNSS/IMU expertise, while incorporating into its products sophisticated enhancements such as SLAM and OD. Not only will this offer more capabilities in the UAV-lidar and AV worlds, but the attractions of the indoor market loom large. Indeed, as the interview took place, the company was limbering up for its upcoming 2019 Workshop on Airborne Mapping and Surveying, a two-day educational and networking event, run jointly with camera supplier Phase One. While this reflects today’s strong trend to user events as counterparts to professional conferences, it is better construed as a reflection of Applanix’s success and its exuberant growth into the AV/UAV space.

1 Cheves, M., 2008. Applanix: solutions for mobile mapping and positioning, The American Surveyor, 5(8): 20-29, September.

2 Our meeting took place prior to the unveiling of the CL-90 and CL-360 by Teledyne Optech.

3 This was released shortly after the interview.

4 Nastro, L., 2019. Point of view, Autonomous Vehicle International, October, 54-55. Reproduced at https://www.applanix.com/news/Auton-Vehicle-Intl_Nastro_Oct2019.pdf.