Recently I heard someone describe my organization as a 36-year-old startup company. That is a pretty good description of what is going on here.

When someone new joins our team, I give them this advice: Find a way to remain relevant. Search for ways to explore your true potential. Push past the barriers that are limiting your current level of experience and understanding. In other words, try everything, fear nothing.

Editor’s note: A PDF of this article as it appeared in the magazine is also available.

Today more than ever, remaining relevant feels like the proverbial “drinking from a fire hose.” The pace of innovation has surpassed anything I have seen in almost four decades in the geospatial data industry. We are experiencing rapid change on a daily basis: new companies, new sensors, new ways to visualize, new markets and new delivery mechanisms. The need for the most current knowledge and understanding can become one of the biggest obstacles to starting a new project. One has to consider specializing in a single methodology and becoming an expert in it, while fearing that the chosen path could lead to obsolescence in just a few years. Change is a given.

The Ancient Art of Mobile Data Collection

When we added lidar to our mobile platform in 2007 and took on an enormous 90,000-mile project in early 2008, we were put on the map, almost by default, as a leader in this realm. Very few practical solutions or tools were available to us at the time. We had to innovate: build the collection vehicle, write the reduction platform software and create a delivery mechanism for the 3D data.

Our organization grew fivefold in just six months. The experience nearly killed us, but what came out on the other side was an industry juggernaut. Since then, we have collected and reduced hundreds of thousands of miles of 3D data for more than 30 state and local departments of transportation across North America.

As 3D data evolved and its consumption grew across the globe, other companies decided to capitalize on its value and joined the fray. Collectors such as Google, Navteq/Nokia/HERE, TomTom, Microsoft and Apple advanced their platforms with the addition of lidar, and 3D data quickly became a commodity. To remain relevant, we had to raise the bar and find a niche. Our position in the DOT market turned out to be our best bet.

Fast forward to today. With hundreds of thousands of miles of processed 3D data in hand from large network-level DOT projects, we asked ourselves: could there be other ways to create value from such a large reservoir of data?


We began receiving inquiries from the AI community. Researchers were looking for large, well-quantified 3D roadway datasets to test their automated analysis algorithms. Most of this research clearly came from people trying to make their mark in the arena of self-driving vehicles. We knew our role would never be to build an automated vehicle platform, but it became apparent that something new was on the horizon: a need for a certain type of fuel that we had in abundance. 3D Maps!

The opportunity opened our eyes to a plethora of new relationships and strengthened our established partnerships. But to remain relevant, we had to pick up our pace. Innovation in this sector was happening at breakneck speed and we found ourselves standing in the middle of several intersections. The only way to sort and absorb the massive knowledge stream was to find the common threads and stick to things to which we could add value.

We discovered several opportunities where the 3D Map was going to have an important role. In Advanced Driver Assistance Systems (ADAS), the Map would be a guide, giving vehicles a sense of place.

We specialize in 3D infrastructure and asset maps that can describe the complications and alternatives an ADAS must consider beyond simply driving in a straight line. The data can also assist in designing and modifying infrastructure to accommodate new technologies and more relevant safety features.

The next step was to find a way to take the output from existing collection efforts and distill it into established formats such as Autoware and OpenDRIVE. We also believe the Map can carry other information relevant to safety and fuel efficiency, such as a roughness index, the coefficient of friction and a safety confidence rating. Knowledge of fiducial signs, which help guide vehicles and improve the accuracy of their location, is another piece of the puzzle to embed in the Map for autonomous vehicles.

Do we have this figured out? To a degree. This work gives us a revenue stream that should keep the company around for another five years. But with great vision comes great understanding… of the next transition. This one is going to change our whole way of doing business. Again.

The Dynamic Map of the Future

The Map is headed for major disruption. It will become dynamic, updating in real time, and those updates will come from the output of the lane-keeping sensor packages that actually use the Map. This is what companies like Mobileye have in mind, and with millions of systems already on the road, the writing is on the wall. Many automakers understand this approach and are preparing by working with companies that can build sensor packages that keep a car in its designated place and update the Map instantly.

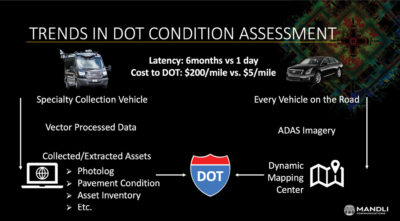

This will create a dilemma for companies that produce cradle-to-grave transportation maps and asset inventories for government agencies. The need for direct-to-drive collection will diminish except for special projects.

Instead of putting out a bid for asset and pavement data collection and waiting six to twelve months for the results, a DOT will have daily, if not hourly, updates on infrastructure performance, at a considerably lower cost. Most of the data will come from cars and trucks moving down the roadways.

As a company, we are adjusting to this new reality by learning to source data from many different origins including current competitors. There are attempts to control disparate datasets by hoarding and housing them in secure cloud environments to limit access. Our hope is that all public data can remain accessible and be used to create a new and enhanced understanding of resources needed for mobility.

In the end, there is still plenty of opportunity to distill pertinent information and knowledge from this data for uses beyond the logistics of the New Mobility ecosystem. Initiatives are underway to understand how the capacity of infrastructure can be maximized and monetized. One of my favorite examples comes from a white paper titled “An action plan to realize the visions outlined in the Urban Mobility for a Digital Age and Great Streets for Los Angeles: 2018-2020 Strategic Plan.”[1]

Los Angeles has essentially defined how it will “Code the Curb” as a means to price the use of public infrastructure. Geospatial data will become the cornerstone of the Mobility Data Specification (MDS).

It’s a plan designed to deliver digital services and information to mobility providers at all levels. Any municipality could implement its features to address the burdens and growth of the New Mobility. The study looks at Micro Mobility (scooters and bikes), Shared Mobility (ride-sharing services), Mobility Hubs (seamless trips from transit to the last mile) and Transportation Happiness.

As dollars from parking and permitting continue to decline, this model will give a municipality a way to understand how it can monetize its infrastructure to produce new revenue streams.

The good news is that there is a lot of curb to be coded, and that will be a good source of work for my company well into the future. But it will be an evolution.