In his book, Digital Photogrammetry, Prof. Toni Schenk writes that “Photogrammetry and cats share a common, most important trait: both have several lives”1. This aptly describes the progression of technology in the photogrammetry industry, which has moved rapidly through analog, semi-analytical, analytical, digital and soft-copy stages to UAV-based photogrammetry for mapping small areas at low cost. All that is required is a drone that can carry a camera, and images captured with high overlap/sidelap to cover the area. Processing is handled by readily available commercial photogrammetry software. Accuracy can be improved by using better lenses, flying at altitudes that keep image scale consistent between images, and adjusting the amount of overlap/sidelap. Structure from Motion (SfM) has also emerged as a simplified version of photogrammetry that can quickly create a relative 3D model. Despite the success of low-cost UAV photogrammetry systems, the technology has its challenges, which can be categorized as either operational or principle (that is, inherent to the method). We will discuss these challenges and the solutions offered by Geodetics’ Mobile Mapping System (Geo-MMS) family of products.

Operational challenges of UAV photogrammetry include: the time-consuming survey of ground control points (GCPs) in and around the area of interest (AOI); the effort of locating these GCPs in the captured images, which is considerable given the number of images needed to cover an AOI with the up-to-90% overlap/sidelap that photogrammetry requires; and processing time, which can be a day or more.
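To give a feel for how overlap/sidelap drives the number of images, the following is a minimal sketch, assuming a rectangular AOI flown in parallel strips and a fixed rectangular image footprint on the ground. The function name, the 1 km AOI and the 100 m footprint are illustrative assumptions, not values from any Geodetics product.

```python
import math


def estimate_image_count(aoi_width_m, aoi_length_m,
                         footprint_width_m, footprint_length_m,
                         overlap, sidelap):
    """Rough image count for a rectangular AOI flown in parallel strips.

    overlap and sidelap are fractions in [0, 1), e.g. 0.75 for 75%.
    Returns (total_images, flight_lines).
    """
    if not (0 <= overlap < 1 and 0 <= sidelap < 1):
        raise ValueError("overlap and sidelap must be in [0, 1)")
    # Distance between consecutive exposures along a flight line.
    along_spacing = footprint_length_m * (1.0 - overlap)
    # Distance between adjacent flight lines.
    across_spacing = footprint_width_m * (1.0 - sidelap)
    images_per_line = math.ceil(aoi_length_m / along_spacing)
    flight_lines = math.ceil(aoi_width_m / across_spacing)
    return images_per_line * flight_lines, flight_lines


# Hypothetical 1 km x 1 km AOI with a 100 m x 100 m ground footprint.
high, high_lines = estimate_image_count(1000, 1000, 100, 100, 0.75, 0.75)
low, low_lines = estimate_image_count(1000, 1000, 100, 100, 0.5, 0.5)
print(high, low)  # 1600 400
```

In this simplified model, going from 75% to 50% overlap/sidelap cuts the image count by a factor of four and halves the number of flight lines, which is why every GCP has to be found in far more images when overlap is high.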

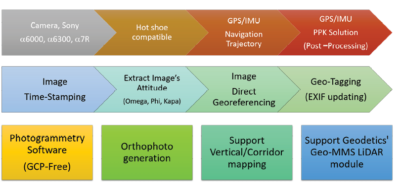

To overcome these operational challenges, Geodetics’ Geo-Photomap product integrates precise GPS/IMU data with an onboard camera, resulting in direct georeferencing of the captured images without the need for GCPs, as well as significantly reduced overlap/sidelap requirements. The key to this process is tight control of camera-shutter triggering and accurate time-tagging of the captured images, so that each image can be correlated with the inertial solution. The exterior orientation parameters (EOP) of the images, including geographical position and the omega, phi and kappa angles, are interpolated/extrapolated from the GPS/IMU trajectory and added to the EXIF image headers. Once this is complete, the time/geo-tagged images are processed by commercially available photogrammetry packages. Because GCPs are not required, this approach also overcomes the unavailability of GCPs that is commonly encountered in corridor and vertical-structure mapping. A flowchart of the approach is shown in Figure 1.
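The interpolation step described above can be sketched as follows. This is a minimal illustration, not Geo-Photomap's actual algorithm: it assumes the trajectory is available as arrays of timestamps, positions and omega/phi/kappa angles in degrees, uses plain linear interpolation (a reasonable approximation only when the trajectory is sampled densely relative to the platform dynamics), and does not extrapolate, since `np.interp` holds the end values outside the trajectory time span. All names are hypothetical.

```python
import numpy as np


def interpolate_eop(traj_t, traj_xyz, traj_opk_deg, shutter_t):
    """Linearly interpolate position and omega/phi/kappa at shutter times.

    traj_t       : (N,) increasing trajectory timestamps in seconds
    traj_xyz     : (N, 3) positions
    traj_opk_deg : (N, 3) omega, phi, kappa in degrees
    shutter_t    : (M,) image time tags in seconds
    Returns (xyz, opk_deg), each of shape (M, 3).
    """
    traj_t = np.asarray(traj_t, dtype=float)
    traj_xyz = np.asarray(traj_xyz, dtype=float)
    shutter_t = np.asarray(shutter_t, dtype=float)
    # Unwrap angles so a +180/-180 degree crossing is not interpolated through 0.
    opk = np.unwrap(np.radians(np.asarray(traj_opk_deg, dtype=float)), axis=0)

    xyz = np.column_stack(
        [np.interp(shutter_t, traj_t, traj_xyz[:, i]) for i in range(3)])
    ang = np.column_stack(
        [np.interp(shutter_t, traj_t, opk[:, i]) for i in range(3)])
    # Wrap the interpolated angles back into [-180, 180).
    opk_deg = (np.degrees(ang) + 180.0) % 360.0 - 180.0
    return xyz, opk_deg
```

The unwrapping matters for kappa in particular: a UAV heading that passes through ±180° between two trajectory epochs would otherwise yield an interpolated heading pointing in nearly the opposite direction.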

Figure 2: Examples where UAV photogrammetry fails: scale mismatch and missing data under foliage, trees and canopy.

The principle challenges of UAV photogrammetry, those inherent to the method, include a low base-to-height ratio and short parallaxes, which limit vertical resolution and accuracy. UAV photogrammetry also struggles in areas with little or no texture, such as foliage, canopy, icefields and flat desert. Scale mismatches of more than 20% between images can cause processing to stop. This problem arises from insufficient parallax around tall features, such as cliffs or towers, where the relief displacement exceeds the image dimension and the predefined scale. Figure 2 illustrates the principle challenges. For many vertical structures, including power poles and trees, point clouds are cut short in the vertical component, and areas around foliage appear black. Variations in ground elevation can also lead to mismatched scale between images, and thus to gaps in the mapped area.
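The link between base-to-height ratio and vertical accuracy can be made concrete with the textbook relation for the normal stereo case, sigma_Z = (H / B) · (H / f) · sigma_p, where H is the flying height, B the stereo base, f the focal length and sigma_p the parallax measurement precision in the image. The sketch below is only an illustration of that relation; the function names and the example numbers are assumptions, and real bundle adjustments with many overlapping images behave better than a single stereo pair.

```python
def stereo_base_m(footprint_along_track_m, overlap):
    """Base between consecutive exposures for a given forward overlap fraction."""
    return footprint_along_track_m * (1.0 - overlap)


def vertical_precision_m(flying_height_m, base_m, focal_length_m, parallax_sigma_m):
    """Normal-case stereo: sigma_Z = (H / B) * (H / f) * sigma_p."""
    return ((flying_height_m / base_m)
            * (flying_height_m / focal_length_m)
            * parallax_sigma_m)


# Hypothetical camera: 10 mm lens, 5 micron pixels, half-pixel matching precision,
# flown at 100 m with a 100 m along-track footprint.
sigma_p = 0.5 * 5e-6
for overlap in (0.6, 0.8, 0.9):
    base = stereo_base_m(100.0, overlap)
    sigma_z = vertical_precision_m(100.0, base, 0.010, sigma_p)
    print(f"overlap {overlap:.0%}: base {base:.1f} m, sigma_Z {sigma_z:.3f} m")
```

For a single pair, raising the overlap shortens the base and degrades the height precision in direct proportion, which is the base-to-height effect described above.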

To overcome the principle challenges, lidar is a promising alternative. A lidar aerial mapping payload typically includes a high-performance inertial navigation system coupled with an onboard lidar sensor. Raw lidar data is processed in real time or with post-mission software to provide accurate, directly georeferenced lidar point clouds. Lidar’s advantages include the ability to map areas with foliage or high canopy and to generate DTM/DSM products even in areas with little or no texture.
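Direct georeferencing of lidar returns is commonly written as p_map = r_INS + R_body→map · (R_boresight · p_sensor + lever arm). The following is a minimal sketch of that equation for a batch of points sharing one pose; in practice every return has its own time tag and interpolated pose. The Z-Y-X (yaw, pitch, roll) rotation order, the frame definitions and all names are assumptions made for illustration and do not describe the Geo-MMS processing software.

```python
import numpy as np


def rotation_zyx(roll, pitch, yaw):
    """Body-to-map rotation R = Rz(yaw) @ Ry(pitch) @ Rx(roll), angles in radians."""
    cr, sr = np.cos(roll), np.sin(roll)
    cp, sp = np.cos(pitch), np.sin(pitch)
    cy, sy = np.cos(yaw), np.sin(yaw)
    rx = np.array([[1, 0, 0], [0, cr, -sr], [0, sr, cr]])
    ry = np.array([[cp, 0, sp], [0, 1, 0], [-sp, 0, cp]])
    rz = np.array([[cy, -sy, 0], [sy, cy, 0], [0, 0, 1]])
    return rz @ ry @ rx


def georeference_points(points_sensor, ins_position, roll, pitch, yaw,
                        boresight=None, lever_arm=None):
    """Map sensor-frame lidar points into the mapping frame for one pose."""
    pts = np.asarray(points_sensor, dtype=float)
    boresight = np.eye(3) if boresight is None else np.asarray(boresight, dtype=float)
    lever_arm = np.zeros(3) if lever_arm is None else np.asarray(lever_arm, dtype=float)
    # Sensor frame -> body frame: boresight rotation plus lever-arm offset.
    body = pts @ boresight.T + lever_arm
    # Body frame -> mapping frame: INS attitude plus INS position.
    return body @ rotation_zyx(roll, pitch, yaw).T + np.asarray(ins_position, dtype=float)
```

The boresight rotation and lever arm are the calibration terms that tie the lidar to the inertial unit; small errors in either show up as systematic offsets between overlapping flight lines.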

Considering the high horizontal resolution provided by photogrammetry and the high vertical resolution provided by lidar, it makes sense to merge the data from these two sensors. A combination of point (lidar) and pixel (camera) provides the best of image-based photogrammetry and lidar mapping. The advantages of this integration are: enhanced visualization; lidar point-cloud colorization, which facilitates point-cloud classification because RGB information is available for each point; enhanced DTM/DEM extraction after removing the classified objects; orthophoto generation in areas where photogrammetry fails (e.g., glaciers, tree canopy and mine-site conveyors); and enhanced change detection. Figure 4 shows the integration of point and pixel data, generated by Geodetics’ Point&Pixel™ system.
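Point-cloud colorization amounts to projecting each georeferenced lidar point into a georeferenced image and reading the RGB value at that pixel. The sketch below illustrates the idea under simplifying assumptions: an ideal pinhole camera with no lens distortion, a camera frame whose +Z axis points along the viewing direction, a world-to-camera rotation matrix supplied directly, and nearest-pixel sampling with no occlusion handling. It is not the Point&Pixel implementation, and all names are hypothetical.

```python
import numpy as np


def colorize_points(points_world, image_rgb, r_world_to_cam, cam_center,
                    focal_px, principal_point):
    """Assign an RGB value to each point by pinhole projection into one image.

    Returns (colors, valid); colors is (N, 3), valid is a boolean mask marking
    points that fall inside the image and in front of the camera.
    """
    pts = np.asarray(points_world, dtype=float)
    center = np.asarray(cam_center, dtype=float)
    # World frame -> camera frame.
    cam = (np.asarray(r_world_to_cam, dtype=float) @ (pts - center).T).T
    in_front = cam[:, 2] > 0
    # Avoid dividing by zero or negative depth; such points are masked out below.
    depth = np.where(in_front, cam[:, 2], 1.0)
    u = focal_px * cam[:, 0] / depth + principal_point[0]
    v = focal_px * cam[:, 1] / depth + principal_point[1]
    col = np.round(u).astype(int)
    row = np.round(v).astype(int)

    height, width = image_rgb.shape[:2]
    valid = in_front & (col >= 0) & (col < width) & (row >= 0) & (row < height)
    colors = np.zeros((len(pts), 3), dtype=image_rgb.dtype)
    colors[valid] = image_rgb[row[valid], col[valid]]
    return colors, valid
```

Points left uncolored by one image (outside its frame or behind the camera) would normally be picked up by a neighboring image, so a full pipeline loops over images and keeps, for example, the color from the image whose center is closest to the point.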

To illustrate the advantages of Point&Pixel integration, Figure 5 presents a case study comparing three approaches: classic UAV photogrammetry with high overlap/sidelap using GCPs for georeferencing; Geo-Photomap GCP-free photogrammetry with geo-tagged images and reduced overlap; and Point&Pixel (colorized lidar point clouds). The RMS values on 20 check points distributed throughout the AOI show similar accuracies for classic UAV photogrammetry and Geo-Photomap. With Geo-Photomap, however, the mission was completed in approximately 25% of the time of the classic approach due to the reduced need for sidelap coverage; furthermore, the data processing time was reduced from days to only 3 hours. This is feasible because Geo-Photomap provides image attitude information (omega, phi, kappa) to the photogrammetry processing. The Point&Pixel approach provided accuracy better than 5 cm.
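For readers who want to reproduce this kind of comparison on their own data, the following is a minimal sketch of how RMS errors on check points are typically computed. It assumes two arrays of matching check-point coordinates, one measured in the mapping product and one surveyed independently; the function name and the returned keys are illustrative.

```python
import numpy as np


def checkpoint_rmse(measured_xyz, surveyed_xyz):
    """Per-axis, horizontal and 3D RMS error over a set of check points."""
    diff = np.asarray(measured_xyz, dtype=float) - np.asarray(surveyed_xyz, dtype=float)
    # Root mean square of the residuals along each axis.
    rmse_x, rmse_y, rmse_z = np.sqrt(np.mean(diff ** 2, axis=0))
    return {
        "x": float(rmse_x),
        "y": float(rmse_y),
        "z": float(rmse_z),
        "horizontal": float(np.hypot(rmse_x, rmse_y)),
        "3d": float(np.sqrt(rmse_x ** 2 + rmse_y ** 2 + rmse_z ** 2)),
    }
```

Reporting horizontal and vertical values separately is useful here because, as discussed earlier, photogrammetry and lidar differ most in the vertical component.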

As Schenk rightly predicted, many new technologies are developing around UAV photogrammetry, including remote sensing and multispectral/thermal mapping (e.g., NDVI, EVI and IR indices) used in agriculture/forest management and environmental/disaster preparedness. The majority of existing UAV multispectral systems still collect and process data using the classic photogrammetry approach, with GCPs and flights over relatively small areas due to high overlap/sidelap. This approach is poorly suited to applications such as wildfire mapping, flood modeling, river survey, ecology and geology, which in many instances preclude the distribution and measurement of GCPs. In these cases, the Geo-Photomap approach can be used to relax the requirements for GCP surveying and large overlap/sidelap, enabling the mapping of large areas. Geodetics has added a multispectral capability that provides spectral classification with all the advantages previously described. Figure 6 shows the full Geo-MMS suite.
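As an example of the indices mentioned above, NDVI is defined as (NIR − Red) / (NIR + Red), computed per pixel from co-registered near-infrared and red reflectance bands. The sketch below assumes the two bands are already available as arrays of equal shape; the function name is illustrative, and pixels where both bands are zero are returned as NaN rather than guessed.

```python
import numpy as np


def ndvi(nir, red):
    """Normalized Difference Vegetation Index, (NIR - Red) / (NIR + Red)."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    # Leave NaN wherever the denominator is zero instead of dividing.
    out = np.full(denom.shape, np.nan)
    np.divide(nir - red, denom, out=out, where=denom != 0)
    return out
```

Values close to +1 indicate dense, healthy vegetation, values near zero indicate bare soil or built surfaces, and negative values are typical of water.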

1 Schenk, T., 1999. Digital Photogrammetry, Volume I: Background, Fundamentals, Automatic Orientation Procedures, Terra Science, Laurelville, Ohio, 428 pp: p vii.

Shahram Moafipoor is a senior navigation scientist and director of research and development at Geodetics Inc. in San Diego, California, focusing on new sensor technologies and sensor-fusion architectures. Dr. Moafipoor holds a PhD from The Ohio State University.

Lydia Bock is the President and Chief Executive Officer (CEO) of Geodetics. In 2011, Dr. Bock was honored as CEO of one of the 50 fastest-growing woman-owned/led companies in North America by the Women President’s Organization (WPO). Dr. Bock holds an M.Sc. from The Ohio State University and a Ph.D. from the Massachusetts Institute of Technology.

Jeff Fayman serves as Vice President of Business and Product Development at Geodetics. Dr. Fayman holds a B.A. in Business Administration and an M.Sc. in Computer Science, both from San Diego State University. He holds a Ph.D. in Computer Science from the Technion – Israel Institute of Technology.